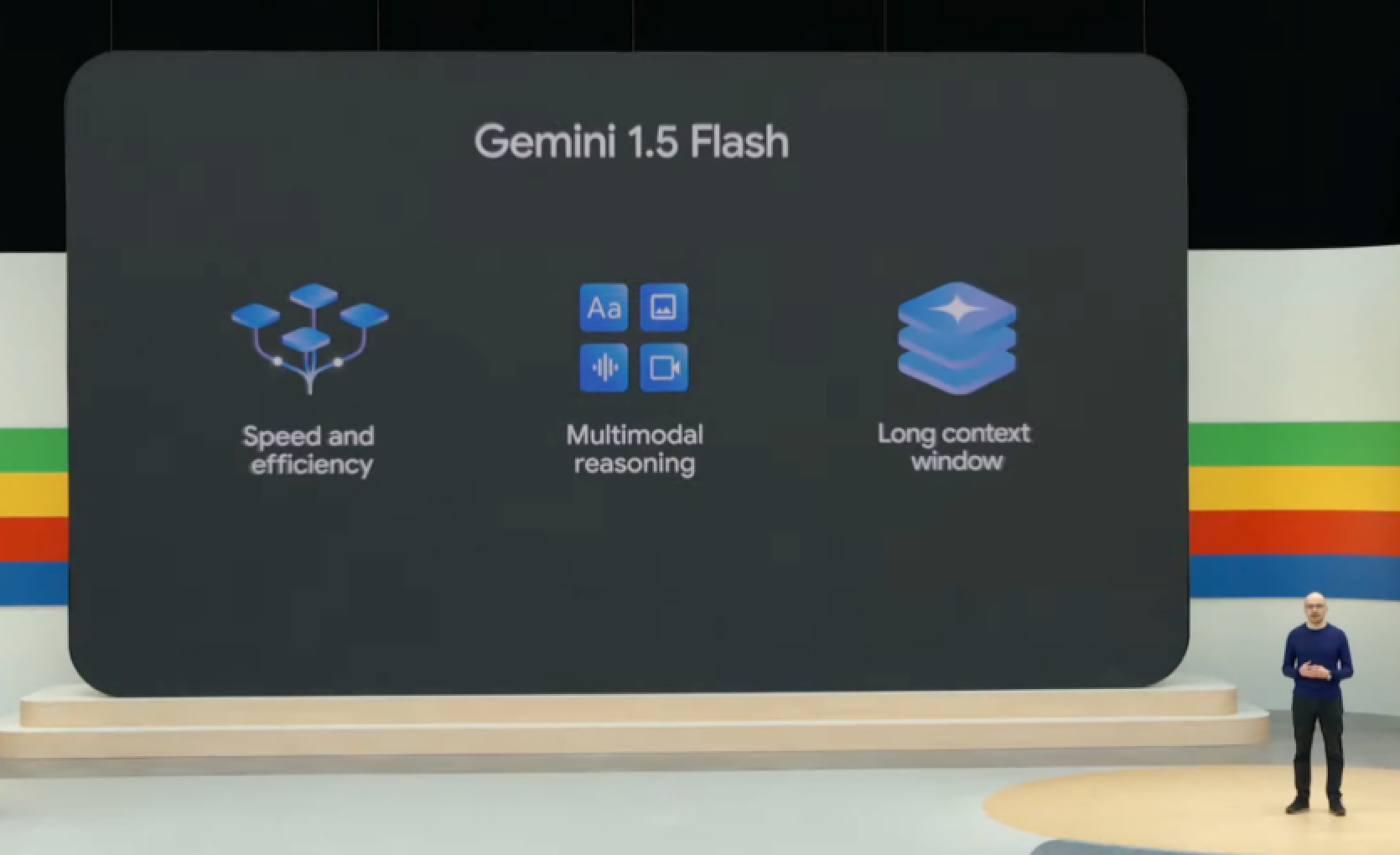

Google has announced Gemini 1.5 Flash, a small multimodal model built for scale and for narrow, high-frequency tasks.

The model comes with a "breakthrough" context window of 1 million tokens and is available today in public preview through the Gemini API in Google AI Studio.

Gemini 1.5 Pro, which debuted in February, is getting a context window expanded to 2 million tokens. Developers interested in the update can sign up for the waitlist.

There are some noticeable differences between the models: Gemini 1.5 Flash is designed for those who value output speed, while Gemini 1.5 Pro is the larger model and performs similarly to Google's Gemini 1.0 Ultra.

Josh Woodward, Vice President of Google Labs, noted that developers should use Gemini 1.5 Flash if they want to quickly perform tasks where low latency matters. On the other hand, he explained that Gemini 1.5 Pro is geared towards "more general or complex, often multi-stage tasks with deliberation."

Gemini 1.5 Flash was unveiled just a day after OpenAI announced GPT-4o, a multimodal LLM available to everyone, including through a separate desktop app.

Both Gemini models are available in public preview in over 200 countries and territories worldwide.
