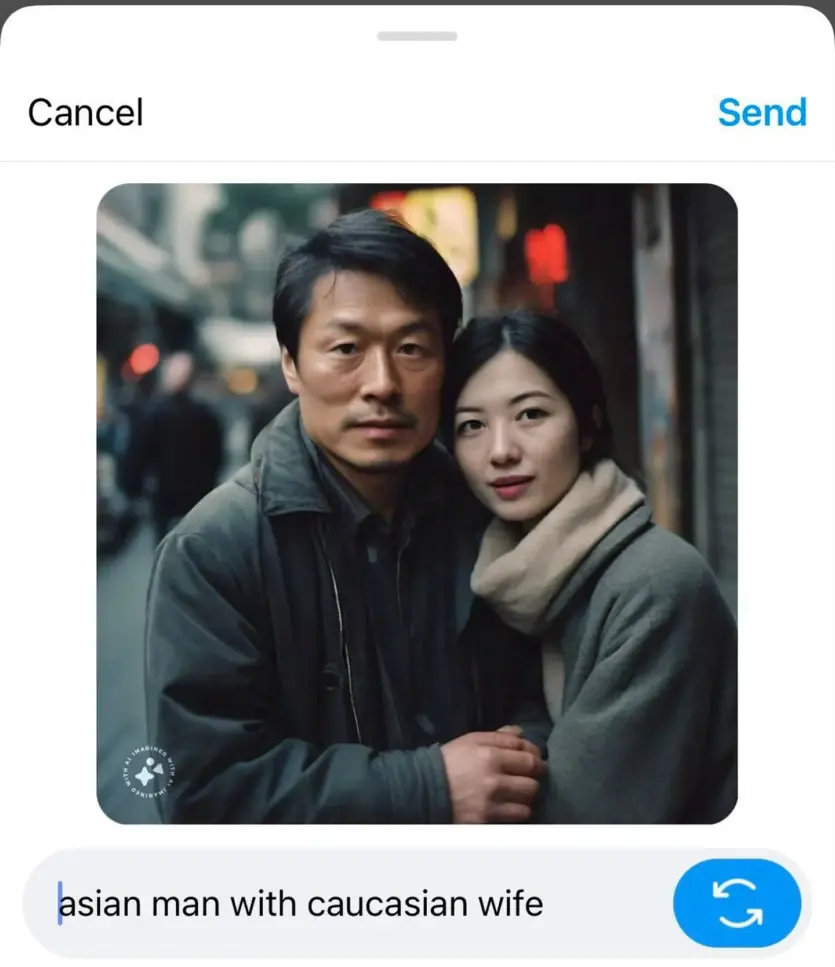

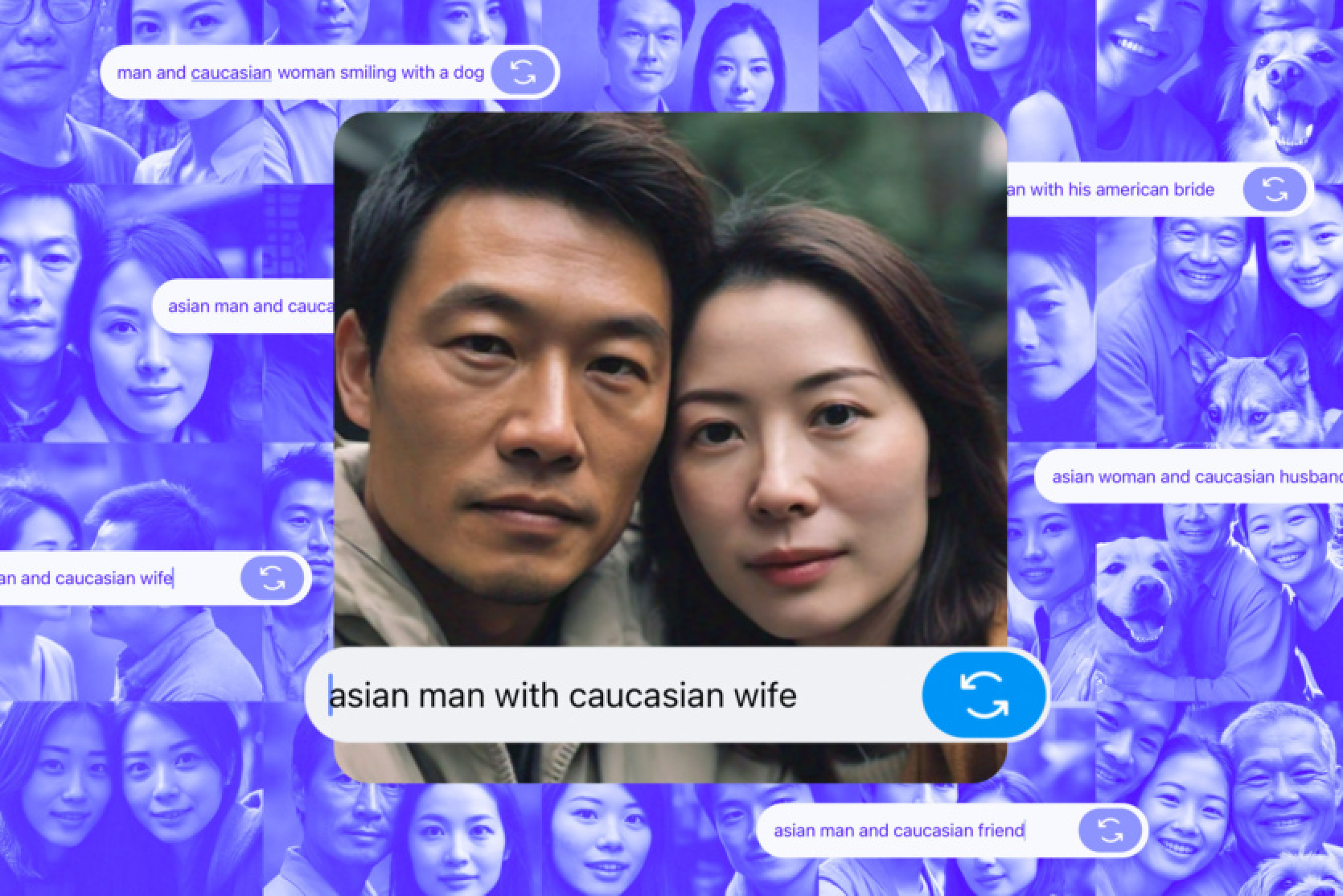

Meta's generative AI tends to create images of people of the same race, even when the prompt explicitly asks otherwise. For example, when asked for "Asian and Caucasian friend" or "Asian and white wife", the image generator produces images of people of the same race.

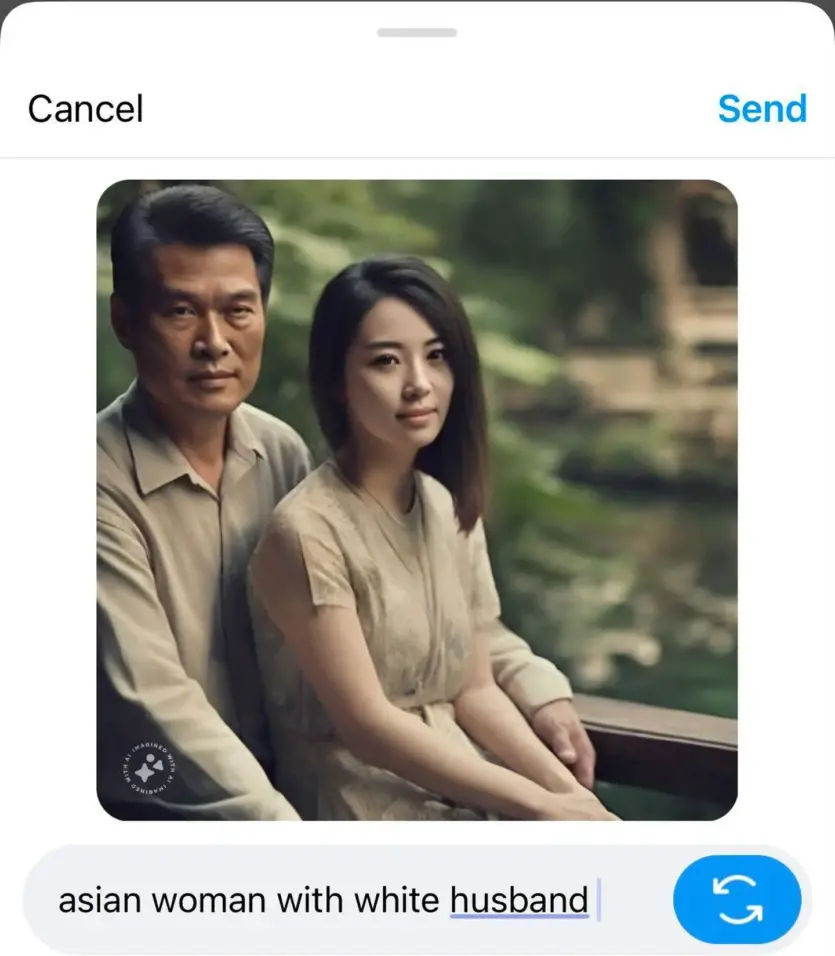

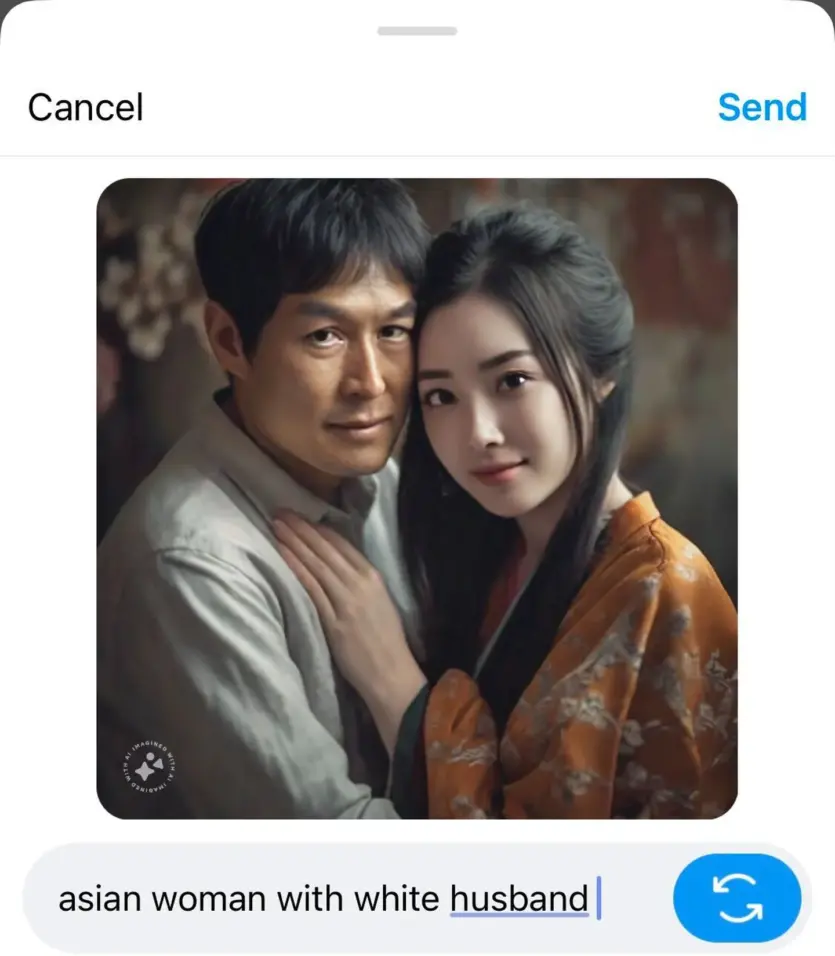

The problem was discovered by journalists at The Verge and later confirmed by Engadget. In tests of Meta's web-based image generator, prompts for "Asian with white girlfriend" or "Asian with white wife" resulted in images of Asian couples. When asked for a "diverse group of people", the Meta AI tool created a grid of nine white faces and one person of color. In a few cases it produced a result that matched the prompt, but most of the time it failed to reflect the request.

According to The Verge, there are other, more "subtle" signs of bias in Meta AI. For example, the generated images tend to depict older Asian men and younger Asian women. The image generator also sometimes added "special cultural clothing", even when it was not part of the prompt.

It is unclear why Meta's generative AI resists such direct requests. The company has not yet commented on the situation, but it previously described Meta AI as a "beta version", so it may have bugs.

This is not the first generative AI model to handle images of people in strange ways. Google recently paused Gemini's ability to generate images of people after errors related to diversity. Google later explained that its internal safeguards did not account for situations where diverse results were inappropriate.

Source: Engadget
