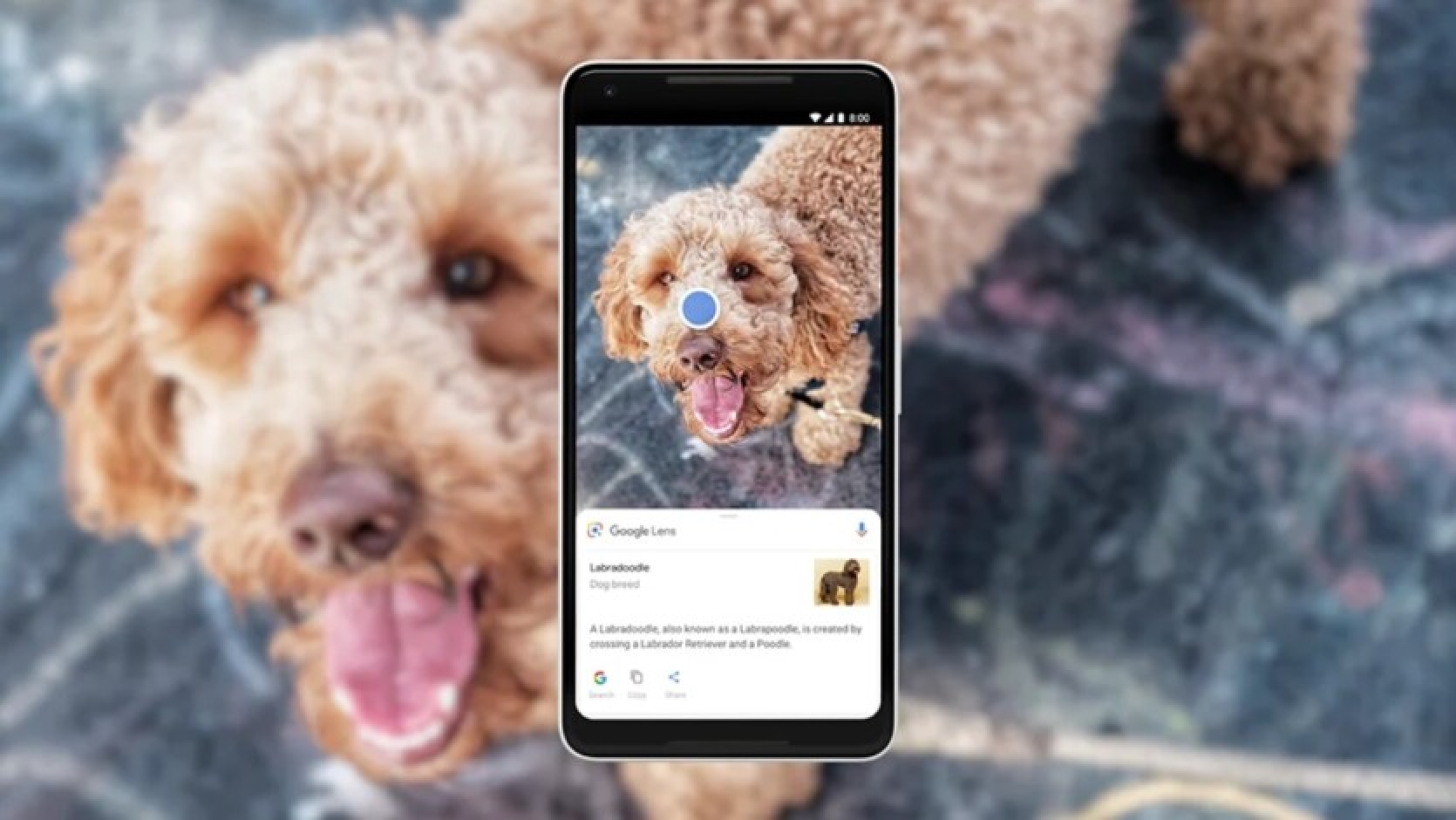

Google Lens now lets you search not only with images but also with video and voice. Just open the camera from the search bar and describe aloud what you are seeing at that moment.

Since yesterday, the feature has been available through Search Labs on Android and iOS, though only in English.

Google first showed video search at its I/O conference in May. In the demo, someone filmed an aquarium and asked why the fish were swimming together as a group, and Google Lens, powered by Gemini, quickly returned a relevant answer.

Rajan Patel, Google’s vice president of engineering, told The Verge that Google Lens treats a video as a series of frames and applies the same computer vision techniques it uses for still images. In addition, it uses Gemini to analyze the sequence of frames together with relevant answers already available online.

For now, Lens cannot identify specific sounds in a video (for example, it won't help you recognize a bird by its song), but Google says it is already experimenting with this capability.

Voice queries for image search in Google Lens have also been updated: you can now ask your question while capturing the image, rather than after it has been processed, as was previously required. This option has already rolled out to all users, but it too is available only in English.