At the Game Developers Conference (GDC), Nvidia demonstrated how developers can use its "digital human" artificial intelligence tools to voice, animate, and generate dialogue for video game characters.

Nvidia showed a video of Covert Protocol, a tech demo meant to illustrate how its AI tools let NPCs react uniquely to player interactions, generating new responses that fit the gameplay as it unfolds.

In the demo, the player takes on the role of a private detective and completes objectives based on conversations with AI-controlled NPCs. Nvidia claims that each playthrough is "unique" because the player's real-time interactions lead to different outcomes. John Spitzer, Nvidia's vice president of developer and performance technology, says the company's AI technologies "can create complex animations and conversational speech needed to make digital interactions feel real."

The Covert Protocol video doesn't show how well these AI-driven NPCs hold up in actual gameplay; instead, it presents a montage of NPC clips delivering different voice lines. The voice delivery and lip-sync animation look robotic, as if a chatbot were talking to you through the screen.

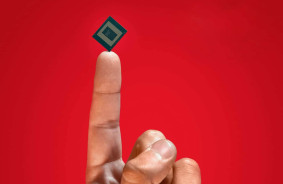

Covert Protocol was built in collaboration with gaming startup Inworld AI using Nvidia's Avatar Cloud Engine (ACE) technology. Inworld plans to release the Covert Protocol source code "soon" to encourage other developers to adopt ACE's digital human technology. In November 2023, Inworld also announced a partnership with Microsoft to help develop Xbox tools for creating AI-driven characters, stories, and quests.

Nvidia also showcased its Audio2Face technology in a video of the upcoming MMO World of Jade Dynasty, in which a character's lips sync to both English and Chinese speech. The idea is that Audio2Face will make it easier to ship games in multiple languages without manually reanimating characters for each one. Another clip, from the upcoming action game Unawake, shows how Audio2Face can generate facial animation for both cinematics and gameplay.

Source: The Verge
